ChatGPT recently took the world by storm, shaking up not only the technology industry but also the daily lives of everyone from business professionals to students. Many of us have also heard of AlphaFold, an artificial intelligence (AI) system that predicts the 3D structure of a protein from its amino acid sequence. Yet this latest chapter of “scary” technology shows that AI is not the eerily humanlike robots of futuristic shows, but rather a new way to maximize efficiency in biotechnology and improve health as we know it. From early disease detection to new drug discovery, AI has many applications in biotechnology.

What is AI?

In layman’s terms, AI is a way of training a computer on large sets of data so that it can make complex decisions, simulating human intelligence (and, in some narrow tasks, exceeding it). In biotechnology specifically, this has massive applications. Instead of relying only on time-intensive cell culture experiments in a wet lab, researchers can use AI to predict many of those results on a screen, as companies like Genesis Therapeutics and Exscientia aim to do. By applying AI to drug discovery, these companies aim to design safer drugs. While AI is already familiar from products like Siri, one of the first mainstream virtual assistants, and the TikTok algorithms that keep us scrolling, biotechnology is still early in adopting these techniques that could transform the industry.

When we hear about AI, we often also hear about machine learning (ML). ML can generally be thought of as the process by which you build artificial intelligence. It typically involves fitting statistical learning models to data so that a computer learns to make complex decisions toward a desired goal.
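To make “fitting a statistical learning model” concrete, here is a minimal Python sketch of about the simplest case: learning a straight-line relationship from example data with ordinary least squares. The `fit_linear` and `predict` helpers and the dose/response numbers are hypothetical, chosen only for illustration; real biotech models are far more complex, but the idea of adjusting parameters to fit data is the same.

```python
# Minimal sketch: "learning" a linear relationship y = slope * x + intercept
# from example data using ordinary least squares (closed-form solution).
# The data below are hypothetical toy values, not real measurements.

def fit_linear(xs, ys):
    """Return (slope, intercept) that minimize squared error over the data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(model, x):
    """Apply the fitted line to a new input."""
    slope, intercept = model
    return slope * x + intercept

doses = [1.0, 2.0, 3.0, 4.0]       # hypothetical input feature
responses = [2.1, 3.9, 6.2, 7.8]   # hypothetical measured outcome
model = fit_linear(doses, responses)
print(predict(model, 5.0))
```

Here the “learning” is the computation of the slope and intercept; once fitted, the model can make a prediction for an input it never saw, such as a dose of 5.0.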

A big focus in machine learning is building proper training data sets (used to train the model initially) and testing data sets (used to validate the model after it’s been trained). As with most analyses, the phrase “garbage in, garbage out” applies: if you feed the model bad data, or don’t validate it properly, your model won’t provide useful results. Once we have those data sets, we start by feeding the model the training data, which teaches it how things work. Then, to check that the model has truly “learned,” we evaluate it on the testing data, which it did not see during training, to make sure it has learned what we want it to learn.
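The train-then-test workflow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the data are synthetic (a hypothetical biomarker level with a yes/no label), the “model” is just a learned cutoff on one feature, and the helper names `train_test_split`, `fit_threshold`, and `evaluate` are our own, not scikit-learn's function of the same name or any other library API.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle rows reproducibly and split them into (training, testing) sets."""
    rows = rows[:]  # copy so the caller's list is not reordered
    random.Random(seed).shuffle(rows)
    n_test = int(len(rows) * test_fraction)
    return rows[n_test:], rows[:n_test]

def fit_threshold(train):
    """Learn the cutoff on one feature that best separates the training labels."""
    def accuracy(t):
        return sum((x >= t) == bool(y) for x, y in train) / len(train)
    candidates = sorted(x for x, _ in train)
    return max(candidates, key=accuracy)

def evaluate(threshold, test):
    """Fraction of held-out examples the learned cutoff classifies correctly."""
    return sum((x >= threshold) == bool(y) for x, y in test) / len(test)

# Synthetic data: a hypothetical biomarker level in [0, 10] and a 0/1 label
# that is 1 when the level plus a little Gaussian noise exceeds 5.
rng = random.Random(42)
data = []
for _ in range(200):
    level = rng.uniform(0, 10)
    label = 1 if level + rng.gauss(0, 0.5) > 5 else 0
    data.append((level, label))

train, test = train_test_split(data)
threshold = fit_threshold(train)
print(f"learned cutoff={threshold:.2f}, test accuracy={evaluate(threshold, test):.2f}")
```

The key design choice is that `evaluate` only ever sees the held-out `test` rows, so its accuracy estimates how the model would do on data it was not trained on.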

How is AI used in biotechnology?

You may have heard of ChatGPT acing the SAT or writing a complex essay, but have you heard of a ChatGPT-style language model creating new, never-before-seen proteins? AI is now being applied across many stages of biotech and healthcare. From drug discovery to precision medicine, AI is a valuable asset that more companies are adopting to increase the efficiency of their operations.

We can see it in clinical settings, where algorithms interpret patient data to help improve outcomes, and on the research and development side of biotechnology, where it is driving new breakthroughs. Because these algorithms are built on data from years of clinical operations, AI can provide recommendations that are not affected by fatigue and that can reduce some of the human biases and other complications that degrade the quality of a decision.

AI can also surface promising results earlier in the drug discovery process. One specific subset of AI is deep learning, which uses neural networks with many layers, each transforming the output of the previous one, to solve complex problems. Neural networks are loosely inspired by the human brain, although they are far simpler than a real one. Deep learning has driven improvements in many tasks, such as predicting how a protein will fold given its amino acid sequence. One recent breakthrough comes from Profluent, a company using AI models trained on protein data to design novel proteins. (Yes, you heard that right!)
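To illustrate what “multiple layers” means, here is a minimal Python sketch of a small feed-forward neural network that turns a protein sequence into a single score. It is a toy under stated assumptions: the amino acid composition encoding, the layer sizes, and the example sequence are hypothetical, and the weights are random rather than learned, so the score itself means nothing. Models such as AlphaFold or protein language models are vastly larger and use different architectures; the point is only that each layer transforms the previous layer's output.

```python
import math
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard amino acids

def encode(sequence):
    """Turn a protein sequence into 20 numbers: the fraction of each amino acid."""
    return [sequence.count(aa) / len(sequence) for aa in AMINO_ACIDS]

def dense(inputs, weights, biases, activation):
    """One fully connected layer: weighted sums of the inputs, then a nonlinearity."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def random_layer(n_in, n_out, rng):
    """Random (untrained) weights; a real model would learn these from data."""
    weights = [[rng.gauss(0, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
# Three stacked layers: 20 inputs -> 16 -> 8 -> 1 output.
layers = [random_layer(20, 16, rng), random_layer(16, 8, rng), random_layer(8, 1, rng)]
activations = [relu, relu, sigmoid]

def forward(sequence):
    """Pass the encoded sequence through each layer in turn."""
    values = encode(sequence)
    for (weights, biases), act in zip(layers, activations):
        values = dense(values, weights, biases, act)
    return values[0]  # a score between 0 and 1

print(forward("MKTAYIAKQR"))  # hypothetical short sequence
```

Training would adjust the weights in every layer so the final score matches known examples; “deep” simply refers to stacking many such layers.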

Challenges and Future Prospects

While AI presents many new possibilities, it also presents new challenges that you may have read about: people worry about jobs being replaced, and the ethics of AI raise legitimate questions for debate. In biotech specifically, there are practical challenges to overcome before AI can deliver its full power at scale across the industry.

First and foremost, we have to consider the limitations of how data is integrated into these models. We need to understand the type of data we are drawing conclusions from and then develop a system to sort through it. For example, many databases draw disproportionately from patients with a particular disease type, which can reduce the accuracy of our ML outputs for other patients. If our data is inherently biased, we cannot reliably apply clinical results replicated with ML to the general public.
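One simple first step in sorting through data is to check whether some groups are over- or under-represented compared with the population you want the model to serve. The Python sketch below assumes hypothetical records tagged with a `disease_type` field and a hypothetical reference distribution; the 10-percentage-point tolerance is an arbitrary illustrative choice, not a standard.

```python
from collections import Counter

def group_shares(records, key):
    """Fraction of records in each group (e.g., each disease type)."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

def flag_imbalance(shares, reference, tolerance=0.10):
    """Groups whose share in the data differs from the reference share by more than tolerance."""
    flagged = {}
    for group, expected in reference.items():
        observed = shares.get(group, 0.0)
        if abs(observed - expected) > tolerance:
            flagged[group] = (observed, expected)
    return flagged

# Hypothetical training records and a hypothetical reference population.
records = ([{"disease_type": "type_A"}] * 70
           + [{"disease_type": "type_B"}] * 20
           + [{"disease_type": "type_C"}] * 10)
reference = {"type_A": 0.40, "type_B": 0.45, "type_C": 0.15}

shares = group_shares(records, "disease_type")
print(flag_imbalance(shares, reference))
```

Here `type_A` is over-represented and `type_B` under-represented relative to the reference, which is the kind of imbalance that can skew a model's outputs for under-represented patients.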

Secondly, research is a heavily regulated process overseen by the Food and Drug Administration (FDA), and that oversight applies to AI and ML even though these tools do not work directly with living organisms. While FDA guidance on the role of ML in research is still limited, guiding principles for Good Machine Learning Practice (GMLP) have been published to help companies in this space develop and operate AI models.

One issue that applies to any AI model is model drift: as the real-world data a model receives shifts away from the data it was trained on, or as the relationship between inputs and outcomes changes, its outputs can become less accurate over time. Models therefore need to be adapted, adjusted, and improved over time to reflect the real-world data they’re receiving. How often to update them depends on the data being ingested and the desired results, and monitoring needs to be set up in production to measure drift over time. For health-related AI models in particular, it’s crucial to monitor drift closely and update models as needed so they keep producing reliable results.
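As one example of production drift monitoring, the sketch below computes the Population Stability Index (PSI), a common way to compare a feature's distribution in production against its distribution in the training data. The bin edges, the synthetic data, and the helper names are illustrative assumptions. A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but any alert threshold should be chosen for the specific model and data. Note that PSI detects changes in the inputs, not directly changes in accuracy.

```python
import math
import random

def binned_fractions(values, edges):
    """Fraction of values falling in each bin [edges[i], edges[i + 1])."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            if edges[i] <= v < edges[i + 1]:
                counts[i] += 1
                break
    return [c / len(values) for c in counts]

def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions."""
    score = 0.0
    for p, q in zip(expected, actual):
        p, q = max(p, eps), max(q, eps)  # guard against log(0) for empty bins
        score += (q - p) * math.log(q / p)
    return score

# Outer edges are infinite so every value lands in some bin.
EDGES = [-math.inf, 3.0, 4.5, 5.5, 7.0, math.inf]

rng = random.Random(1)
training = [rng.gauss(5.0, 1.5) for _ in range(2000)]      # feature at training time
prod_stable = [rng.gauss(5.0, 1.5) for _ in range(2000)]   # production, same distribution
prod_shifted = [rng.gauss(6.5, 1.5) for _ in range(2000)]  # production, mean has drifted

baseline = binned_fractions(training, EDGES)
psi_stable = psi(baseline, binned_fractions(prod_stable, EDGES))
psi_shifted = psi(baseline, binned_fractions(prod_shifted, EDGES))
print(f"stable PSI={psi_stable:.3f}, shifted PSI={psi_shifted:.3f}")
```

In practice a check like this would run on a schedule over recent production inputs, with a high score prompting investigation and possibly retraining.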

Lastly, what about troubleshooting? How can we verify that AI produces accurate results without spending extra time fact-checking every single output? This is difficult, in part because many AI models are “black boxes”: it is hard to see how they arrive at a particular output. This raises many questions about the credibility of AI, even though it is backed by data. For instance, while many AI models are trained on clinical study data, they may fail to account for bias in that data. In fact, AI can propagate the same biases that exist in the data used to train it. Being able to interrogate models in the biotechnology space will be crucial to minimize bias at each stage of life sciences research.
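Opening the black box fully is hard, but one practical way to interrogate a model for bias is to break its performance down by subgroup instead of reporting a single overall number. The minimal Python sketch below does this for hypothetical labels, predictions, and a hypothetical subgroup attribute; a real audit would use a properly held-out test set and look at more than accuracy alone.

```python
from collections import defaultdict

def accuracy_by_group(labels, predictions, groups):
    """Accuracy computed separately for each subgroup."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for y, y_hat, g in zip(labels, predictions, groups):
        total[g] += 1
        correct[g] += int(y == y_hat)
    return {g: correct[g] / total[g] for g in total}

# Hypothetical model outputs on a test set, with a subgroup attribute per patient.
labels      = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
predictions = [1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
groups      = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

per_group = accuracy_by_group(labels, predictions, groups)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, gap)
```

In this toy example the model is right every time for group A but only 40% of the time for group B, a gap that the overall accuracy of 70% would hide.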

As you can see, recent advancements in AI have tremendous applications across biotech; with AI, we can change many aspects of how biotech R&D operates today. The question is: how do you get started? It’s one of the questions we get asked most often.

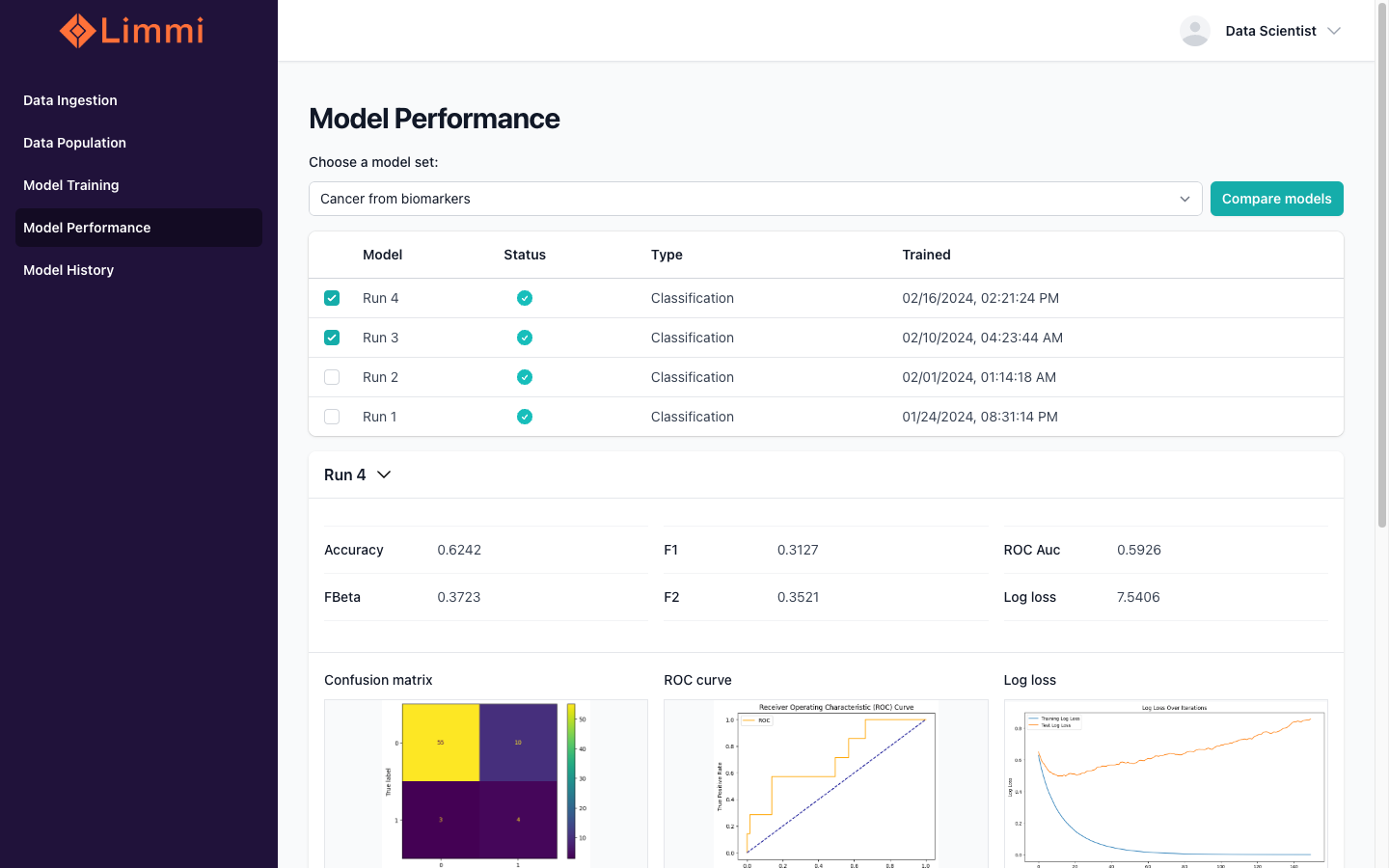

Here’s where Limmi comes in. With both expertise in AI model development and an intuitive ML platform for biotech, Limmi is the perfect partner for your research. Teams looking to rapidly develop AI models to transform their lab and clinical research can do so easily with our modern technology. Users with any level of coding experience can develop, train, deploy, and monitor AI models for any work that requires large-scale data analysis. From genetic research to predicting clinical outcomes, Limmi can be used across all stages of development. Our platform adapts to nearly any type of data you work with, greatly reducing the time you spend organizing data or building deployment infrastructure and increasing the time you spend improving patient outcomes.

We believe the future of AI in healthcare is a truly transformational story; AI can enable massive improvements in nearly every phase of healthcare. We’d love to hear your thoughts on both the promise and the challenges of AI, and if you’ve been thinking of using AI in your practice or business, we’d love to help.

References

Eisenstein, Michael. “AI-Enhanced Protein Design Makes Proteins That Have Never Existed.” Nature Biotechnology, 23 Feb. 2023, https://www.nature.com/articles/s41587-023-01705-y.

Weissler, E. Hope, et al. “The Role of Machine Learning in Clinical Research: Transforming the Future of Evidence Generation.” Trials, 16 Aug. 2021, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8365941/.